By Steve Martin, Head of Sales, Major Markets Australia

At NEXTDC, we've meticulously planned to stay at the forefront of the Generative AI wave, crafting our infrastructure for the high-density compute workloads of today and tomorrow.

Judging by the high-value content and research that industry leaders presented at the recent CRN Pipeline Channel Conference on the Gold Coast, our commitment to being prepared will serve us and our customers well.

AI will transform ordinary into extraordinary

During his keynote address on insights into Generative AI, Trevor Clarke, Co-Founder and Asia Pacific Research Director at Tech Research Asia, reported that 51% of enterprises are either actively implementing or planning AI initiatives.

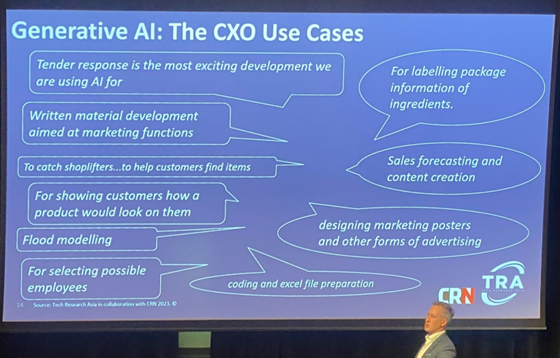

I suspect that, like most conference attendees, I was especially fascinated by a slide Trevor presented titled "Generative AI: The CXO Use Cases", which brought to life AI's potential not only to enable the extraordinary but also to transform the ordinary.

As the channel thinks through the implications of Generative AI and plans for an AI future, it will find that future relies significantly on the cloud. Those who are going to take AI seriously (and I can think of few exceptions) will need to consider either delivering it as a service or deploying complete, dedicated AI cloud platforms for their customers.

As businesses seek to leverage the untold benefits of Generative AI, they will likely start with a handful of workloads, probably running in the cloud, and no doubt scale quickly to hundreds of workloads across every part of their organisation.

"Therein lies the challenge. While the value of AI is undoubted, the expense of using it in the cloud will quickly create a questionable ROI. This raises the question, 'How do you maximise the benefit of AI whilst minimising the costs?'"

In this rapidly evolving landscape, data centre infrastructure plays a pivotal role in AI readiness. How efficiently sustainable data centres handle high-density compute workloads can significantly affect the cost of running AI. At the same time, cloud-based AI solutions are becoming increasingly essential for enterprises aiming to deploy AI at scale. To keep those costs under control, businesses must explore effective AI deployment strategies and invest in power-efficient AI platforms.

The profound influence of AI on businesses is undeniable, and as demand for AI services continues to rise, companies must consider innovative approaches to ensure they reap the full benefits of this transformative technology.

A question of cost and value

To leverage the power of Generative AI effectively and profitably, it needs to become the brain of your organisation and connect to everything that's going on in your business. It must also be nurtured and developed by constantly training and educating both the AI brain and your staff.

The "AI brain" is a good way to think about AI. The more your Generative AI system knows about your business, the more value it delivers. It will become your own private version of HAL or J.A.R.V.I.S. It will operate at the core of your IT infrastructure and, through continuous learning, come to know everything about your business and provide insight into every aspect of your operations.

There seem to be few limits to the good that Generative AI can and will bring to how we do business: from coding to generating tender responses or writing marketing material, catching shoplifters or controlling traffic with video AI, showing customers virtually how a product would look on them, right through to employee selection.

For example, imagine a national fresh food retailer with hundreds of thousands of SKUs (representing billions of dollars) and a complex national and international supply chain. Its objective is both to "never run out of stock" and to "reduce food wastage" through just-in-time ordering and delivery logistics.

Balancing these and many other metrics is hard enough on a normal day, but the retailer also needs to factor in many variables such as weather patterns, supply seasonality, supplier resiliency, school and public holidays, local events and pandemics, and do all this across hundreds of stores around Australia. Using AI for stock forecasting, and for the logistics that coordinate ordering and delivery, will minimise wastage from spoiled produce and take every variable into account, drawing on historical data to make more intelligent decisions. Ultimately, there are tens of millions of dollars to be saved by managing this supply chain as effectively as possible.

That sort of intelligence is genuinely transformative and highly valuable, but it doesn't come overnight. It could require thousands of different AI workloads, each tracking a geographical location's demand, events and weather, combined with local growers, forecast crop yields and supply issues, to forecast the best result for each store. Every workload would be continuously learning from thousands of data points to optimise its results. That scale also raises the question of how best to optimise your Generative AI costs.

The new power-hungry platform

One of the major challenges of AI as a category is power.

Importantly, Generative AI platforms will use significantly more electricity than a normal compute platform. It's already been calculated that, in an average month, ChatGPT uses as much electricity as 175,000 people. If ChatGPT represents perhaps 2% of overall Generative AI usage, and electricity use scales roughly with usage, the total would be equivalent to around 8.75 million people (175,000 ÷ 0.02). The cost of powering AI will be huge, and cloud providers will need to recover that expense from users.

The cost to run AI will likely be breathtaking for some, but so will the benefits and savings. Questions that took minutes or hours to answer before AI will be answered in seconds. For example, the improvement in call centre performance could be significant and offer a genuine competitive edge to those who invest in AI.

Whilst it is still early days, it has been suggested that around 50% of Generative AI workloads will run in public clouds and the remaining 50% in private clouds or customer-owned IT. Furthermore, the more extensively you commit to AI solutions, the more likely you are to run them in-house due to cost, security and sovereignty requirements. However, because of AI's extensive power requirements, very few customer data centres will have the scale and power density to handle the size of the workloads they will generate.

The importance of AI-ready data centres

As mentioned at the beginning of this blog, NEXTDC has invested in making sure we’re well ahead of the curve.

However, if your data centre provider hasn't been preparing for AI over the last decade, they, and you and your customers, will quickly be at a disadvantage. AI platforms pack more power into a smaller space, so your data centre needs to be built to handle higher-density power workloads and to manage extremely high-bandwidth data flows between your systems and your cloud instances, every minute of the day. You also need to be sure the required power can actually be supplied to your data centre.

Given the volume of energy consumed and the heat generated, environmentally efficient cooling is another aspect to consider, and may include direct liquid-to-chip cooling or even full immersion cooling.

A further consideration is the weight of an AI system. Raised-floor environments become a problem when you are dealing with rack weights north of 1,000 kg, as do the logistics of physically getting these systems into your data centre.

Solution expertise will be essential

AI requires its own specialised hardware and software, which can make it difficult to bring all the relevant stakeholders together to deliver a solution.

For the channel, the challenge will be to become experts in the software stack relevant to customers' needs. That means selecting the right models for each customer workload and helping customers think about use cases they haven't yet considered.

However, the bigger discussion is the consulting engagement, where the real business problems are laid out. On the one hand, there are the issues your customers or your business wants to solve; on the other, there are opportunities for new business and revenue streams that can help them – or you – leapfrog ahead of the competition.

What’s next?

The next few years are likely to see a strong uptake in demand for Generative AI platforms. In our world, orders from hyperscale cloud providers have grown tenfold over the last decade, and we expect similar demand for AI-specific platforms.

Cloud providers are competing for that big first-mover advantage. Currently, the largest AI platform in the world (AWS) is in the US, meaning that local AI workloads are sent to America for processing. With Australia's data sovereignty regulations applying to critical infrastructure industries, this will pose a problem for many and an opportunity for others.

If there's one thing the CRN Pipeline Channel Conference highlighted, it is the sheer volume of, and interest in, AI discussions, and the upscaling required to leverage new transformative technologies.

Many channel partners will only be at the early stage of working out how they might use AI in their own business and, more importantly, how they might support their customers' AI strategies, so finding AI-ready partners (like NEXTDC) will be critical.

NEXTDC are talking to the majority of vendors, partners and consultants in the AI space. If you aren't sure where to start, reach out and let us connect you with our ecosystem partners, who may be able to help you on your Generative AI journey.